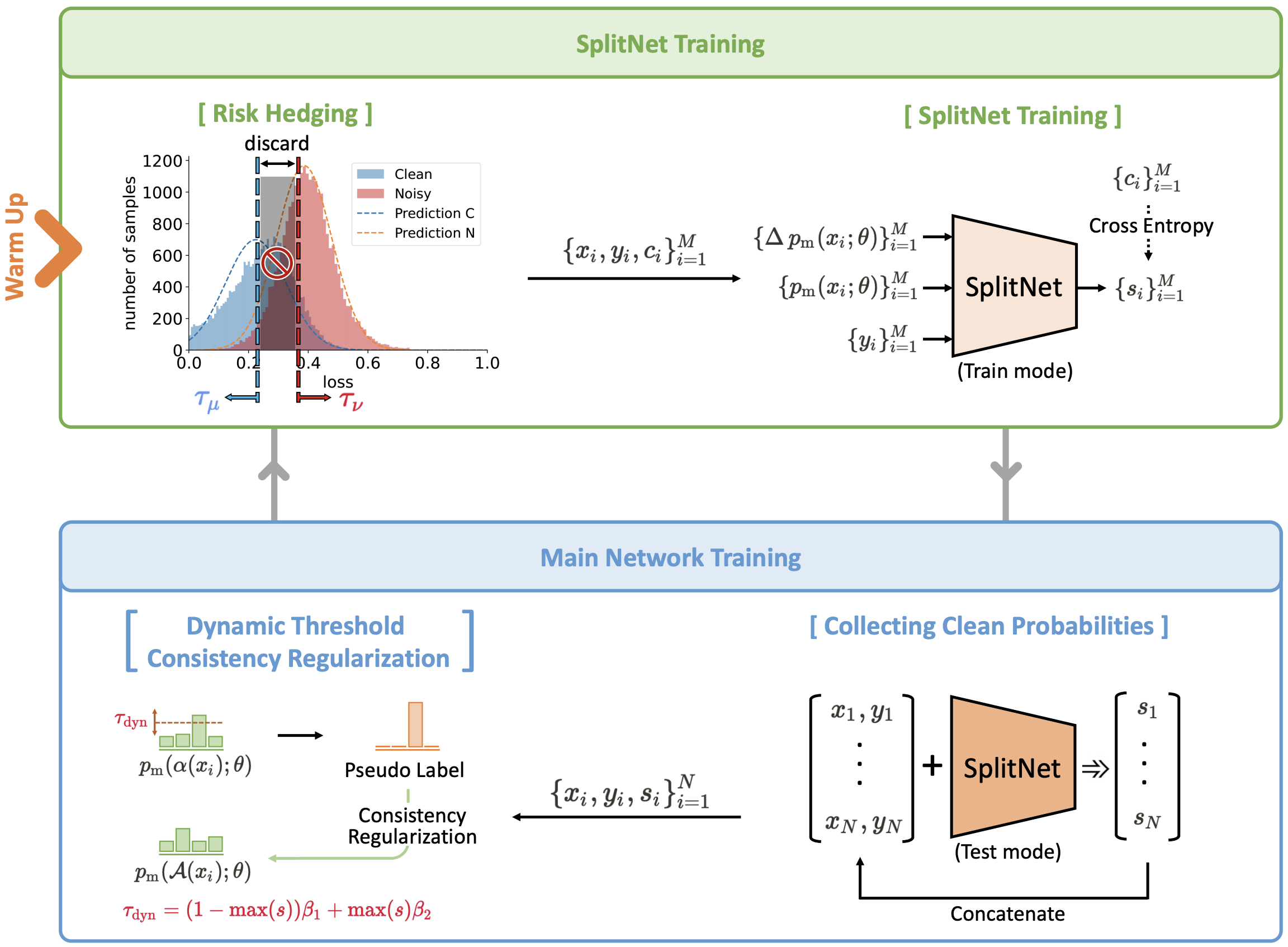

Overall Architecture

After warming up the main model, we use the proposed risk-hedging process to select only confident samples for training SplitNet. SplitNet then provides a clean probability and a split confidence for each sample, and we use this information to train the main model with semi-supervised learning (SSL). The loss distribution produced by the main model is in turn used for risk hedging, so the main model and SplitNet improve each other alternately through this iterative process.
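The paragraph above names risk hedging but does not spell out how confident samples are chosen. The sketch below is a hypothetical stand-in, assuming confidence is read off the main model's per-sample losses by quantiles: the lowest-loss samples become "clean" targets for SplitNet, the highest-loss samples become "noisy" targets, and the ambiguous middle is excluded. The function name `hedge_select` and the `clean_frac`/`noisy_frac` parameters are illustrative assumptions, not the paper's criterion or API.

```python
import numpy as np


def hedge_select(losses, clean_frac=0.3, noisy_frac=0.3):
    """Hypothetical confident-sample selection from per-sample losses.

    Returns an int array: 1 = confidently clean, 0 = confidently noisy,
    -1 = ambiguous (excluded from SplitNet training).
    """
    losses = np.asarray(losses, dtype=float)
    # Loss cut-offs for the confident-clean and confident-noisy tails.
    clean_cut = np.quantile(losses, clean_frac)
    noisy_cut = np.quantile(losses, 1.0 - noisy_frac)
    targets = np.full(losses.shape, -1, dtype=int)
    targets[losses <= clean_cut] = 1   # small loss -> likely clean
    targets[losses >= noisy_cut] = 0   # large loss -> likely noisy
    return targets


# Toy per-sample losses from a (hypothetical) warmed-up main model.
losses = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 1.0]
targets = hedge_select(losses)
print(targets.tolist())  # [1, 1, 1, -1, -1, -1, -1, 0, 0, 0]
```

Samples marked `-1` would simply be left out of SplitNet's loss; in each round of the iterative process, the selection would be recomputed from the main model's latest losses.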

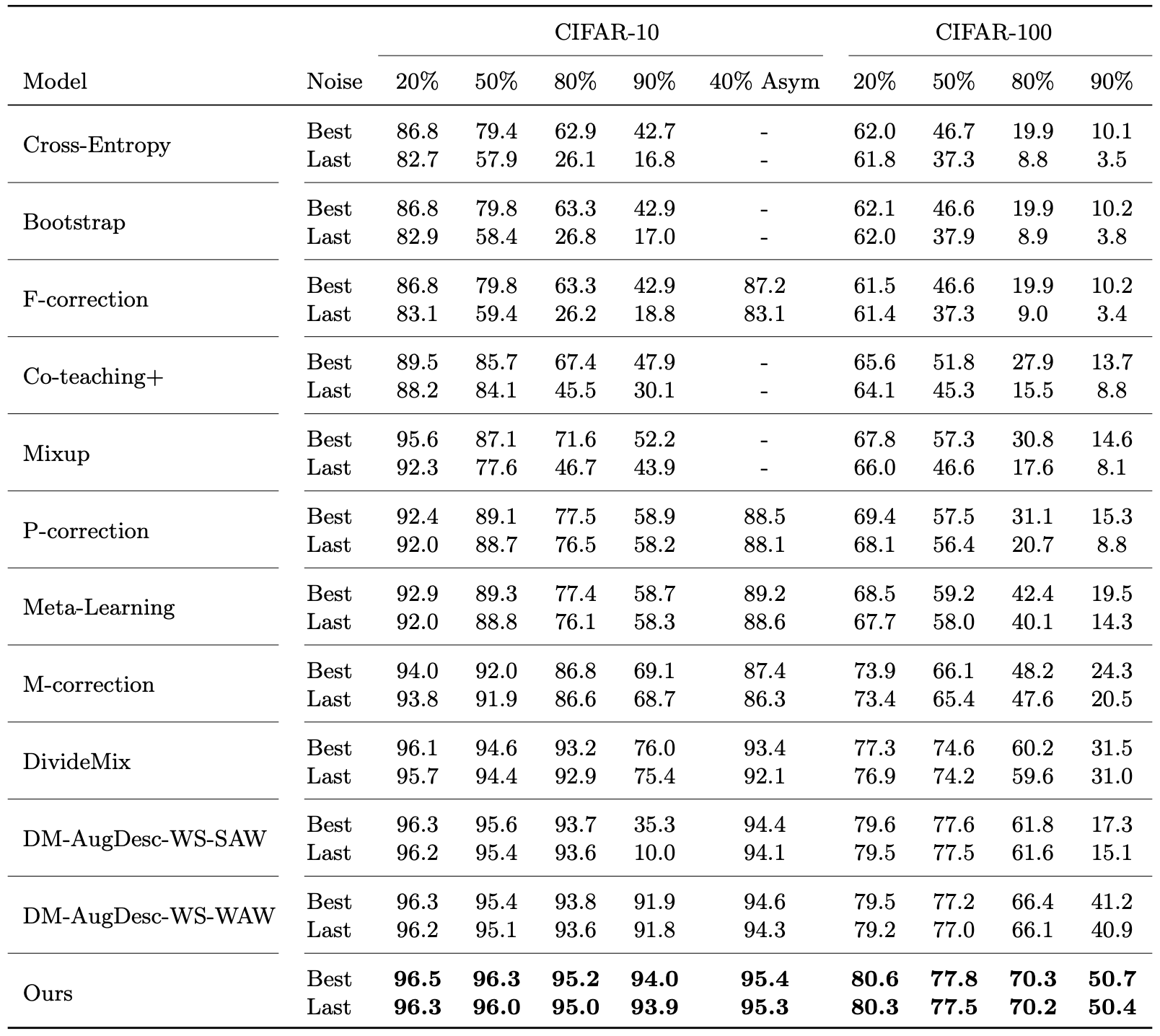

Experimental Results

CIFAR-10/100

Our method improves performance on all evaluated benchmarks, and the gains are most pronounced at the more challenging, higher noise ratios. Note that the best-performing hyper-parameters of DivideMix and AugDesc differ depending on the noise strength, and AugDesc even uses separate best-performing models for different noise strengths (i.e., DM-AugDesc-WS-SAW and DM-AugDesc-WS-WAW), whereas our method improves performance with a single model.

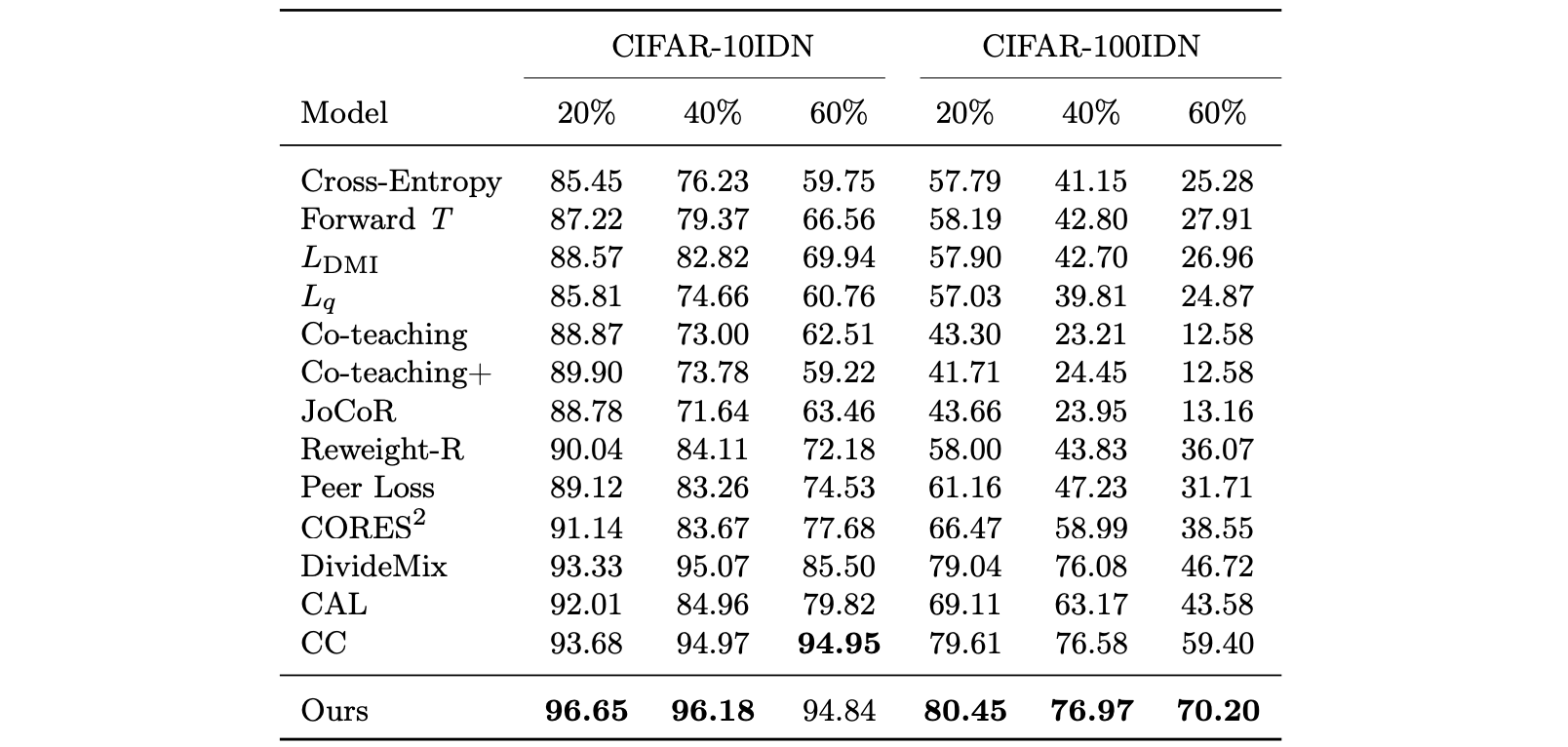

CIFAR-10/100 IDN

CIFAR-10IDN and CIFAR-100IDN are datasets in which part-dependent label noise has been synthetically injected into CIFAR-10 and CIFAR-100, respectively. The construction is motivated by the observation that humans perceive an instance by breaking it down into parts; accordingly, the instance-dependent noise (IDN) transition matrix of an instance is estimated as a combination of the transition matrices of its parts.
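The part-based construction can be made concrete with a small numerical sketch. Assuming each instance is described by non-negative part weights that sum to one, its transition matrix is the weighted sum of row-stochastic part matrices, `T(x) = sum_p w_p(x) * T_p`, which is itself row-stochastic; a noisy label is then drawn from the row of the clean label. The part weights and part matrices below are random placeholders and the helper names are hypothetical; this is not the actual generation code for CIFAR-10IDN/CIFAR-100IDN.

```python
import numpy as np


def instance_transition(part_weights, part_matrices):
    """Combine part-level transition matrices into one instance-level matrix.

    part_weights:  shape (P,), non-negative, summing to 1.
    part_matrices: shape (P, C, C), each row-stochastic.
    Returns the (C, C) matrix sum_p w_p * T_p.
    """
    w = np.asarray(part_weights, dtype=float)
    Ts = np.asarray(part_matrices, dtype=float)
    # Contract the part axis: (P,) x (P, C, C) -> (C, C).
    return np.tensordot(w, Ts, axes=1)


def sample_noisy_label(clean_label, T, rng):
    # Row `clean_label` of T is the distribution of the observed (noisy) label.
    return int(rng.choice(T.shape[0], p=T[clean_label]))


rng = np.random.default_rng(0)
C, P = 3, 2                                             # classes, parts (toy sizes)
part_matrices = rng.dirichlet(np.ones(C), size=(P, C))  # random row-stochastic T_p
part_weights = rng.dirichlet(np.ones(P))                # random part weights for one instance
T = instance_transition(part_weights, part_matrices)
noisy = sample_noisy_label(clean_label=1, T=T, rng=rng)
```

Because a convex combination of row-stochastic matrices stays row-stochastic, every row of `T` is a valid label distribution, which is what makes the sampling step well defined.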

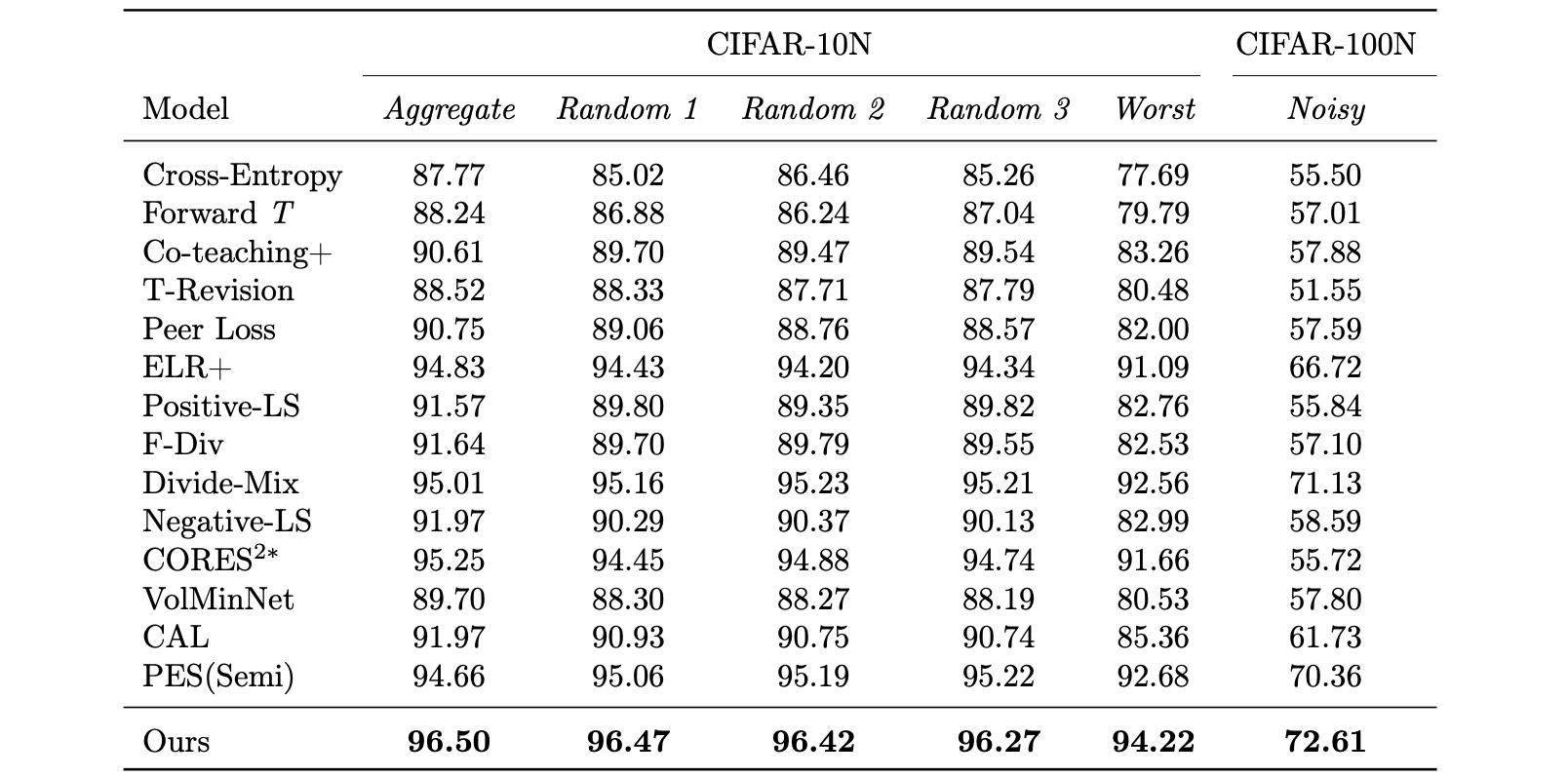

CIFAR-10/100 N

CIFAR-N equips the training sets of CIFAR-10 and CIFAR-100 with human-annotated, real-world noisy labels collected from Amazon Mechanical Turk. Unlike existing real-world noisy datasets, CIFAR-N provides a controllable, easy-to-use, moderate-sized benchmark with both ground-truth and noisy labels.
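Because CIFAR-N ships both ground-truth and noisy labels, its noise can be measured directly. The sketch below computes the overall noise rate and an empirical transition matrix (row = ground-truth class, column = noisy label). It assumes the labels are already loaded as integer arrays; loading code and file formats are deliberately not shown, and the helper names are hypothetical.

```python
import numpy as np


def noise_rate(clean, noisy):
    """Fraction of samples whose noisy label differs from the ground truth."""
    clean = np.asarray(clean, dtype=int)
    noisy = np.asarray(noisy, dtype=int)
    return float(np.mean(clean != noisy))


def empirical_transition(clean, noisy, num_classes):
    """Estimate P(noisy = j | clean = i) from paired labels."""
    clean = np.asarray(clean, dtype=int)
    noisy = np.asarray(noisy, dtype=int)
    counts = np.zeros((num_classes, num_classes), dtype=float)
    np.add.at(counts, (clean, noisy), 1.0)  # count each (clean, noisy) pair
    row_sums = counts.sum(axis=1, keepdims=True)
    # Normalise each row; classes absent from `clean` keep an all-zero row.
    return np.divide(counts, row_sums, out=np.zeros_like(counts), where=row_sums > 0)


# Toy labels standing in for one CIFAR-N annotation set.
clean = [0, 0, 1, 1, 2, 2]
noisy = [0, 1, 1, 1, 2, 0]
rate = noise_rate(clean, noisy)                      # 2 of 6 labels differ
T_hat = empirical_transition(clean, noisy, num_classes=3)
```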

Analysis

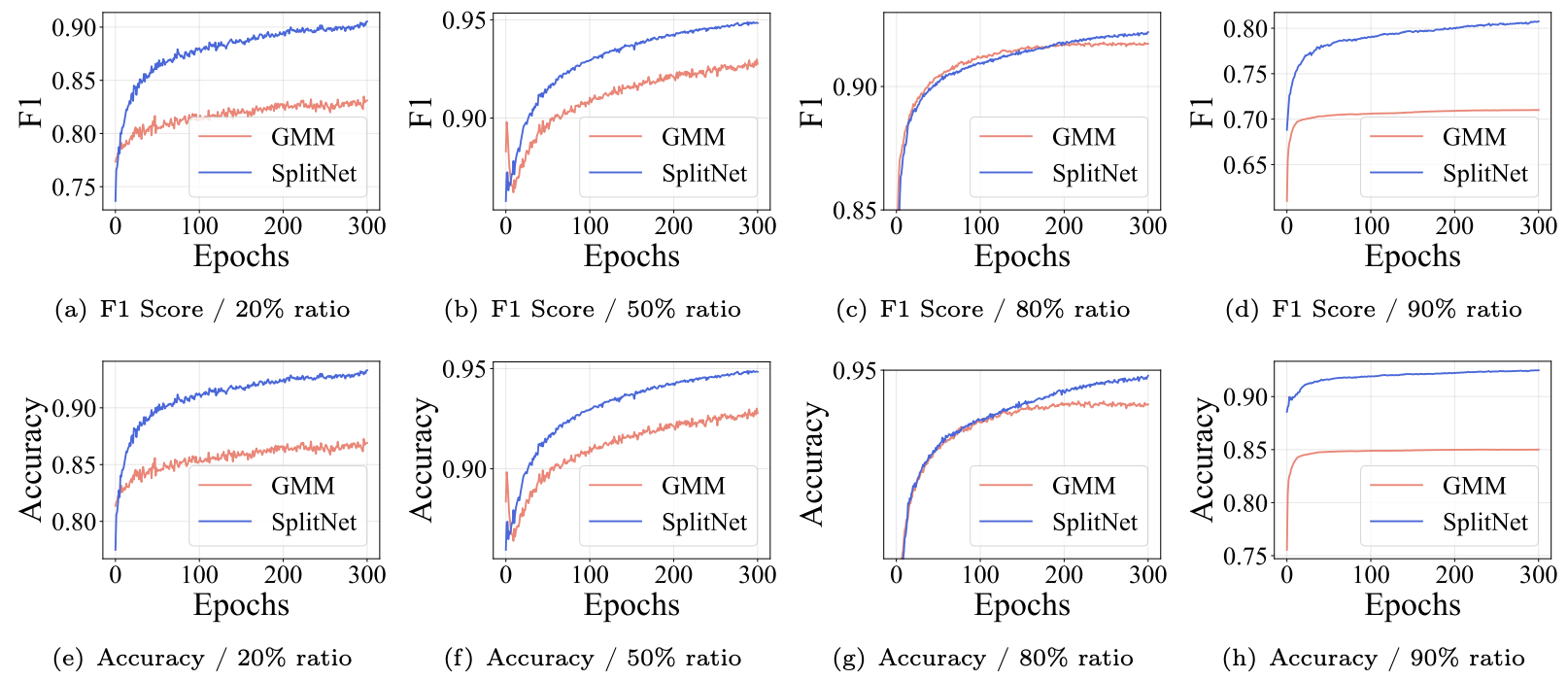

Accuracy and F1 Score

Panels (a), (b), (c), and (d) show the F1 score, and panels (e), (f), (g), and (h) show the accuracy, of clean/noisy splitting at noise ratios of 20%, 50%, 80%, and 90%, respectively. For every noise ratio, SplitNet achieves a higher F1 score and accuracy than the compared methods, which means that it identifies the truly clean samples more accurately.
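Both metrics in the figure can be computed from a ground-truth clean mask and a predicted clean mask. The sketch below assumes "clean" is the positive class for F1 (the text does not state which class is treated as positive); `split_metrics` is a hypothetical helper.

```python
import numpy as np


def split_metrics(is_clean, pred_clean):
    """Accuracy and F1 of a clean/noisy split, with 'clean' as the positive class."""
    y = np.asarray(is_clean, dtype=bool)
    p = np.asarray(pred_clean, dtype=bool)
    tp = np.sum(y & p)    # clean samples correctly selected
    fp = np.sum(~y & p)   # noisy samples wrongly selected as clean
    fn = np.sum(y & ~p)   # clean samples that were missed
    accuracy = float(np.mean(y == p))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, float(f1)


# Toy example: 3 truly clean samples, 2 noisy ones.
acc, f1 = split_metrics([1, 1, 1, 0, 0], [1, 1, 0, 1, 0])
print(acc, round(f1, 4))  # 0.6 0.6667
```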

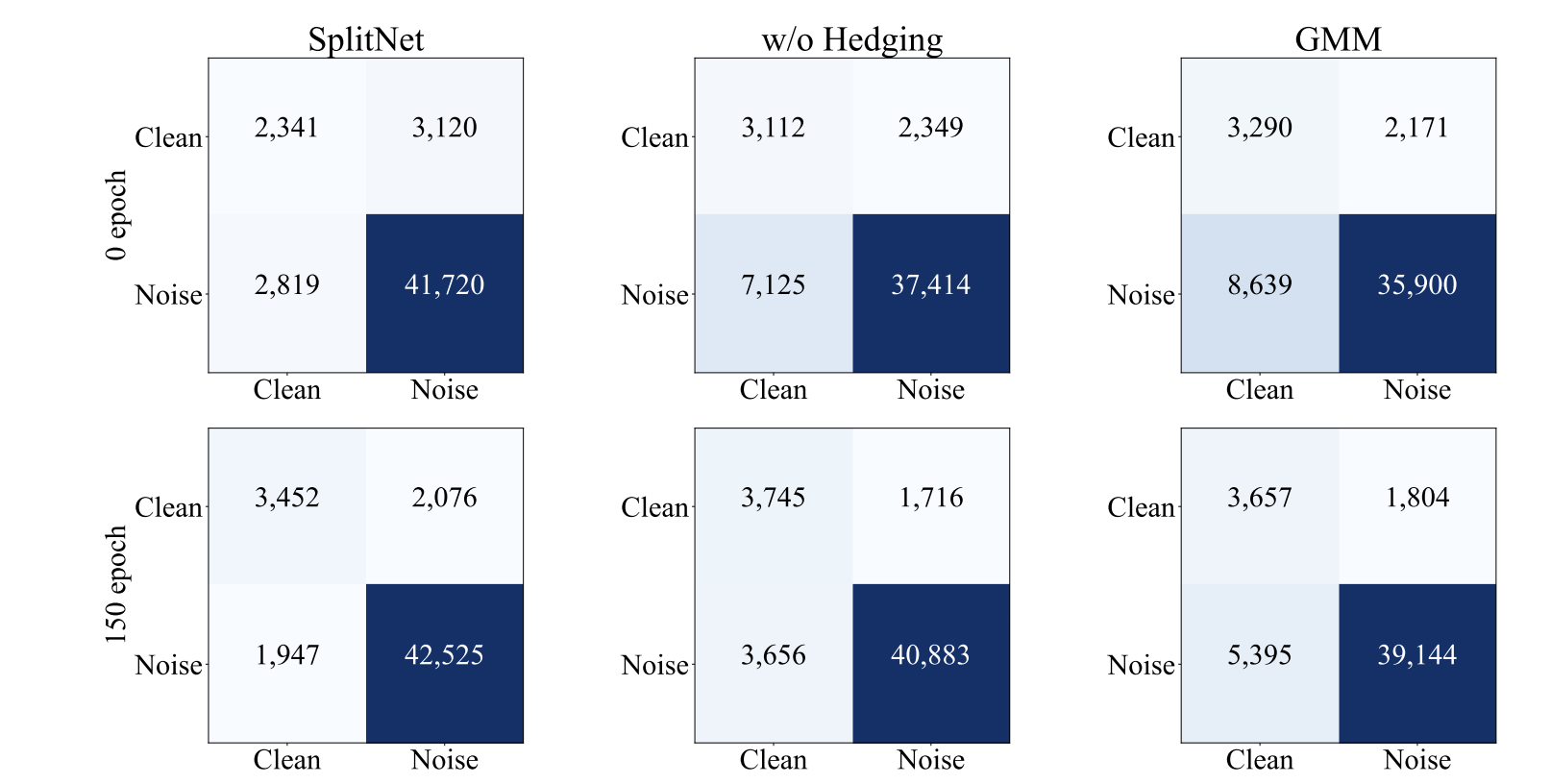

Confusion Matrix

In each confusion matrix, the horizontal axis represents the prediction and the vertical axis represents the ground truth. The far-left column shows the results of SplitNet trained through risk hedging after warm-up, the middle column shows the results of SplitNet trained on data filtered with a fixed threshold, and the far-right column shows the results of GMM. The top row shows results at epoch 0, and the bottom row shows results at epoch 150.
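A 2x2 confusion matrix with the same orientation (rows = ground truth, columns = prediction) can be built as follows, here fed by a fixed-threshold split of clean probabilities. The threshold of 0.5, the label coding (0 = noisy, 1 = clean), and the helper names are assumptions for illustration; the text does not give the fixed threshold actually used.

```python
import numpy as np


def threshold_split(clean_prob, threshold=0.5):
    # Fixed-threshold filtering: predict clean (1) when the clean probability exceeds the threshold.
    return (np.asarray(clean_prob, dtype=float) > threshold).astype(int)


def split_confusion(is_clean, pred_clean):
    # Rows = ground truth, columns = prediction; index 0 = noisy, 1 = clean.
    cm = np.zeros((2, 2), dtype=int)
    np.add.at(cm, (np.asarray(is_clean, dtype=int), np.asarray(pred_clean, dtype=int)), 1)
    return cm


clean_prob = [0.9, 0.8, 0.3, 0.6, 0.1]   # toy clean probabilities
is_clean = [1, 1, 1, 0, 0]               # toy ground truth
cm = split_confusion(is_clean, threshold_split(clean_prob))
print(cm.tolist())  # [[1, 1], [1, 2]]
```

Reading the matrix row by row gives, for each true class, how many samples were kept as clean or filtered out as noisy, which is how the columns of the figure can be compared.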