| We propose MaskingDepth, a novel semi-supervised learning framework for monocular depth estimation that mitigates the reliance on large quantities of ground-truth depth. MaskingDepth enforces consistency between predictions on strongly-augmented unlabeled data and pseudo-labels derived from weakly-augmented unlabeled data, which enables learning depth without supervision. Within this framework, we propose a novel data augmentation that takes advantage of a naïve masking strategy while avoiding its two drawbacks: scale ambiguity between depths from the weakly- and strongly-augmented branches, and the risk of missing small-scale instances. To retain only high-confidence depth predictions from the weakly-augmented branch as pseudo-labels, we also present an uncertainty estimation technique, which is used to define a robust consistency regularization. Experiments on the KITTI and NYU-Depth-v2 datasets demonstrate the effectiveness of each component, the framework's robustness to the use of fewer depth-annotated images, and superior performance compared to other state-of-the-art semi-supervised methods for monocular depth estimation. Furthermore, we show that our method can be easily extended to the domain adaptation task. |
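As a rough illustration of the uncertainty-filtered consistency idea described above, the following is a minimal sketch, not the paper's actual loss. The function name `masked_consistency_loss`, the hard `threshold`, and the use of a mean absolute difference are all assumptions made for illustration; the abstract only states that low-confidence predictions from the weakly-augmented branch are excluded from the pseudo-labels.

```python
def masked_consistency_loss(strong_pred, weak_pred, weak_uncertainty, threshold=0.5):
    """Mean absolute difference between strong-branch depths and weak-branch
    pseudo-labels, counting only pixels whose uncertainty is below `threshold`.

    All three inputs are flat sequences of floats of equal length.
    The threshold and the L1 form are illustrative assumptions.
    """
    if not (len(strong_pred) == len(weak_pred) == len(weak_uncertainty)):
        raise ValueError("all inputs must have the same length")

    # Keep only the pixels where the weak branch is considered confident.
    # In a real training loop the pseudo-label would typically be treated
    # as a constant (no gradient flows through the weak branch).
    kept = [
        abs(s - w)
        for s, w, u in zip(strong_pred, weak_pred, weak_uncertainty)
        if u < threshold
    ]

    # With no confident pixels there is nothing to regularize.
    return sum(kept) / len(kept) if kept else 0.0


if __name__ == "__main__":
    strong = [2.0, 5.0, 9.0]       # depths from the strongly-augmented branch
    weak = [2.5, 5.0, 4.0]         # pseudo-labels from the weakly-augmented branch
    uncertainty = [0.1, 0.2, 0.9]  # third pixel is too uncertain and is dropped
    print(masked_consistency_loss(strong, weak, uncertainty))  # 0.25
```

In this toy input the third pixel disagrees strongly between the two branches, but its high uncertainty removes it from the loss, so the unreliable pseudo-label does not penalize the strong branch.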

|

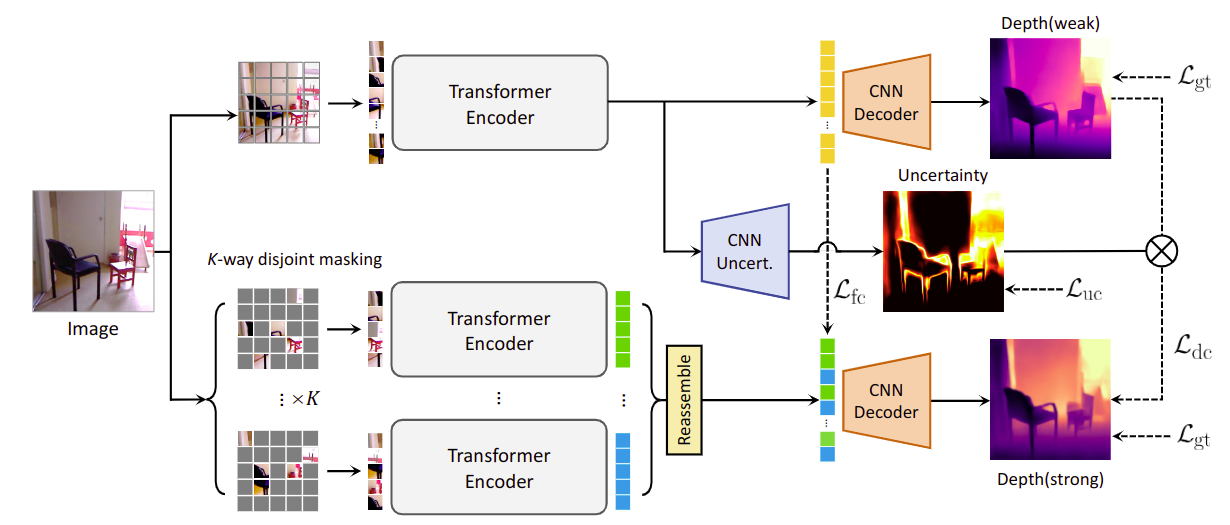

| Our proposed method consists of two main components: a branch using full tokens (top), and a branch using \(K\)-way disjoint masked tokens (bottom), where the \(K\) subsets are encoded independently and concatenated before decoding. We use a consistency loss \(\mathcal{L}_\mathrm{dc}\), aided by an uncertainty measure, to make the predictions for the original and augmented images consistent. A feature consistency loss \(\mathcal{L}_\mathrm{fc}\) is also applied to facilitate convergence. |
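The \(K\)-way disjoint masking in the caption above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the helper names `k_way_disjoint_subsets` and `encode_and_concatenate` are hypothetical, the partition is assumed to be uniformly random, and the encoded tokens are assumed to be placed back in their original order before decoding.

```python
import random


def k_way_disjoint_subsets(num_tokens, k, seed=None):
    """Randomly partition token indices 0..num_tokens-1 into k disjoint subsets."""
    if not 1 <= k <= num_tokens:
        raise ValueError("k must be between 1 and num_tokens")
    rng = random.Random(seed)
    indices = list(range(num_tokens))
    rng.shuffle(indices)
    # Strided slicing yields k subsets whose sizes differ by at most one.
    return [sorted(indices[i::k]) for i in range(k)]


def encode_and_concatenate(tokens, subsets, encode):
    """Encode each subset independently, then reassemble in original token order.

    `encode` is any callable mapping a list of tokens to a list of encoded
    tokens of the same length (a stand-in for the real encoder).
    """
    encoded = [None] * len(tokens)
    for subset in subsets:
        outputs = encode([tokens[i] for i in subset])
        for idx, out in zip(subset, outputs):
            encoded[idx] = out
    return encoded


if __name__ == "__main__":
    tokens = [f"patch{i}" for i in range(12)]
    subsets = k_way_disjoint_subsets(len(tokens), k=4, seed=0)

    # Toy encoder: tags each token with how many tokens it was encoded with.
    def toy_encode(group):
        return [(token, len(group)) for token in group]

    print(encode_and_concatenate(tokens, subsets, toy_encode))
```

Because the subsets are disjoint and together cover every token, each token is encoded exactly once while seeing only a fraction of the image, so the decoder still receives a full set of tokens.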

|

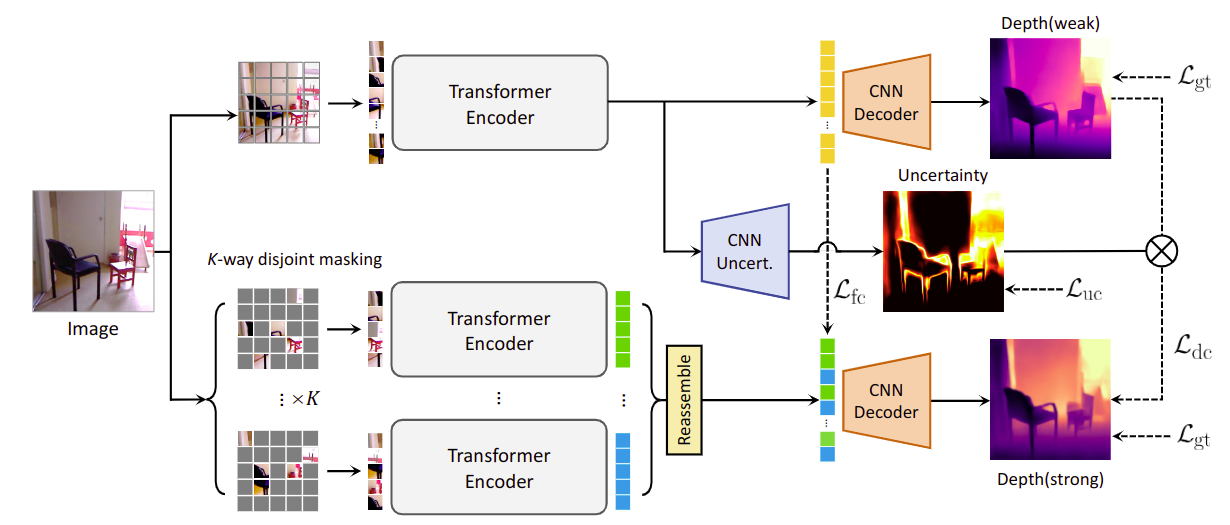

| (a) RGB image and its masked version. The first rows of (b) and (c) show naïve masking results, and the second rows of (b) and (c) show our masking results. In (d), we visualize the difference map between (b) and (c), and report the mean scale difference in the boxed area. Our method generates more scale-consistent results, which in turn helps the monocular depth estimation network learn better. |

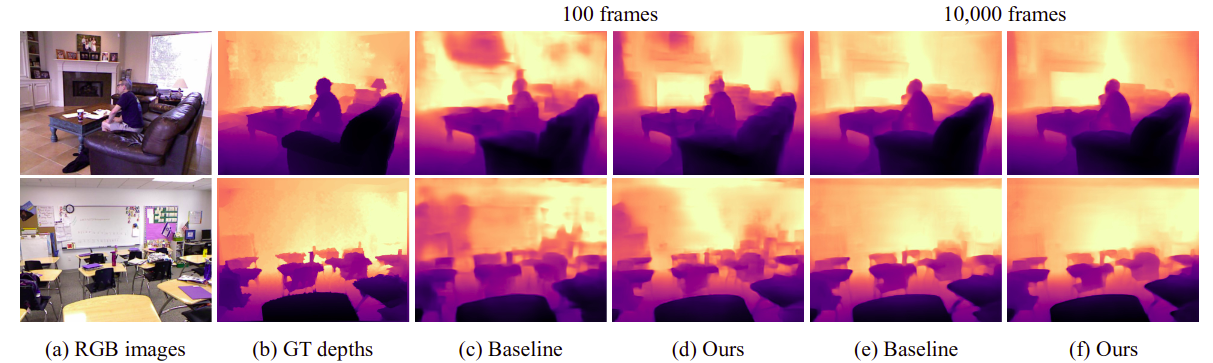

Sparsely Supervised Results on NYU

|

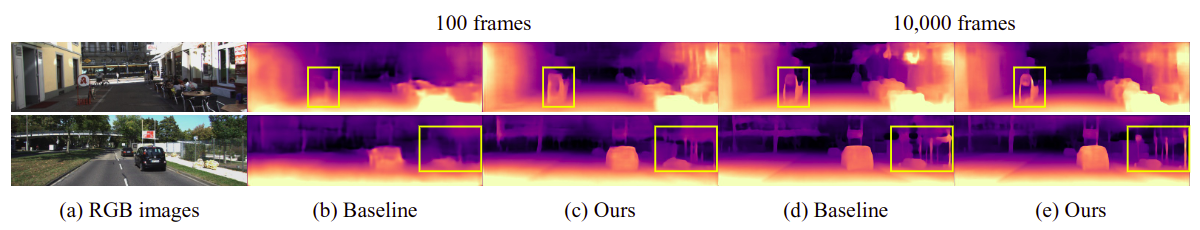

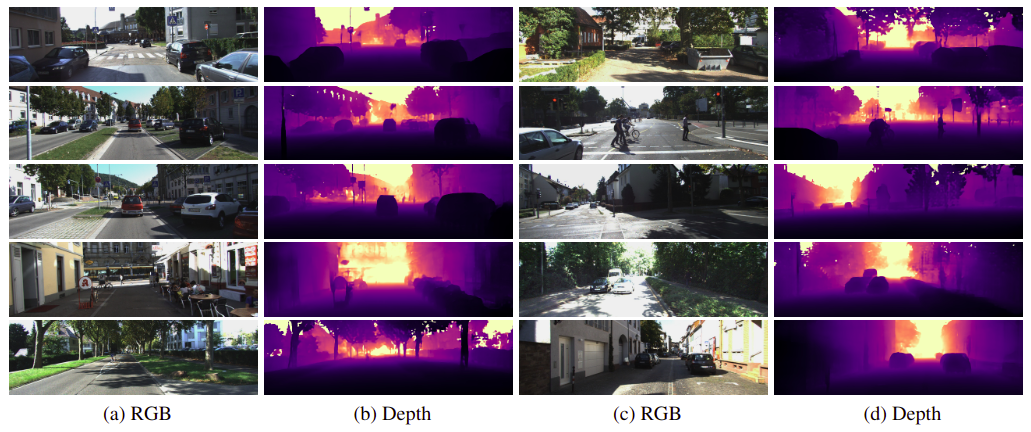

| Qualitative results on the KITTI dataset. (a), (c) RGB images, and (b), (d) depth maps. Our framework also works well for the domain adaptation task on real-world images. We simply apply our framework to unsupervised domain adaptation methods, using virtual KITTI (vKITTI) and KITTI as the synthetic and real datasets, respectively. |

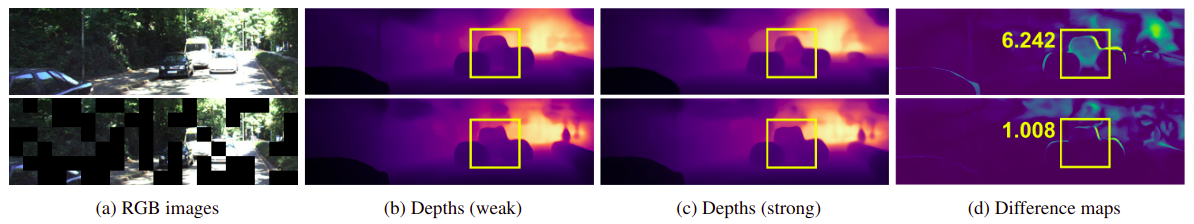

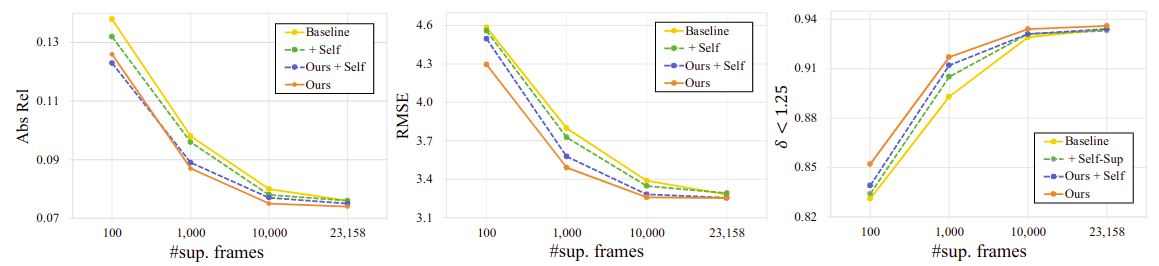

| Quantitative results on the KITTI dataset in a sparsely-supervised setting. ‘Baseline’ uses only sparse depth supervision, ‘Self’ indicates existing self-supervised strategies, and ‘Ours’ indicates the proposed semi-supervised learning framework. Our method outperforms the baseline for every number of labeled frames. As the number of labeled frames decreases, performance begins to degrade rapidly, mostly because the model cannot learn the appropriate scale and structure of the scene from such sparse information. However, as the labels become sparser, our method degrades more slowly than the baseline or the naïve semi-supervised approach using self-supervised losses, and the performance gap widens. |

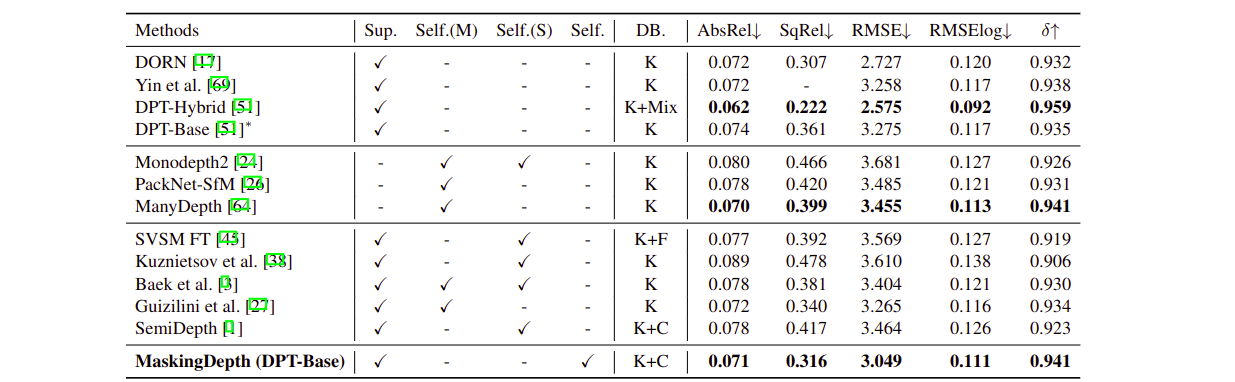

| Quantitative results comparing our method against existing approaches on the Eigen split of the KITTI dataset, using the improved ground truth. ‘Sup.’ indicates supervised training; ‘Self.(M)’ and ‘Self.(S)’ indicate existing self-supervised strategies on video and stereo pairs, respectively. ‘Self.’ denotes our proposed consistency regularization, which needs no stereo images or video sequences. ‘K’, ‘C’, and ‘F’ indicate KITTI, Cityscapes, and FlyingThings3D, respectively. ‘Mix’ indicates the dataset proposed in DPT, which contains 1.4M images and is approximately 60 times larger than KITTI (DB.). ‘\(\ast\)’ denotes results from our own runs. |

|

J. Baek, G. Kim, S. Park, H. An, M. Poggi, S. Kim. MaskingDepth: Masked Consistency Regularization for Semi-supervised Monocular Depth Estimation. (hosted on arXiv) |

Acknowledgements

This template was originally made by Phillip Isola and Richard Zhang for a colorful ECCV project; the code can be found here. |